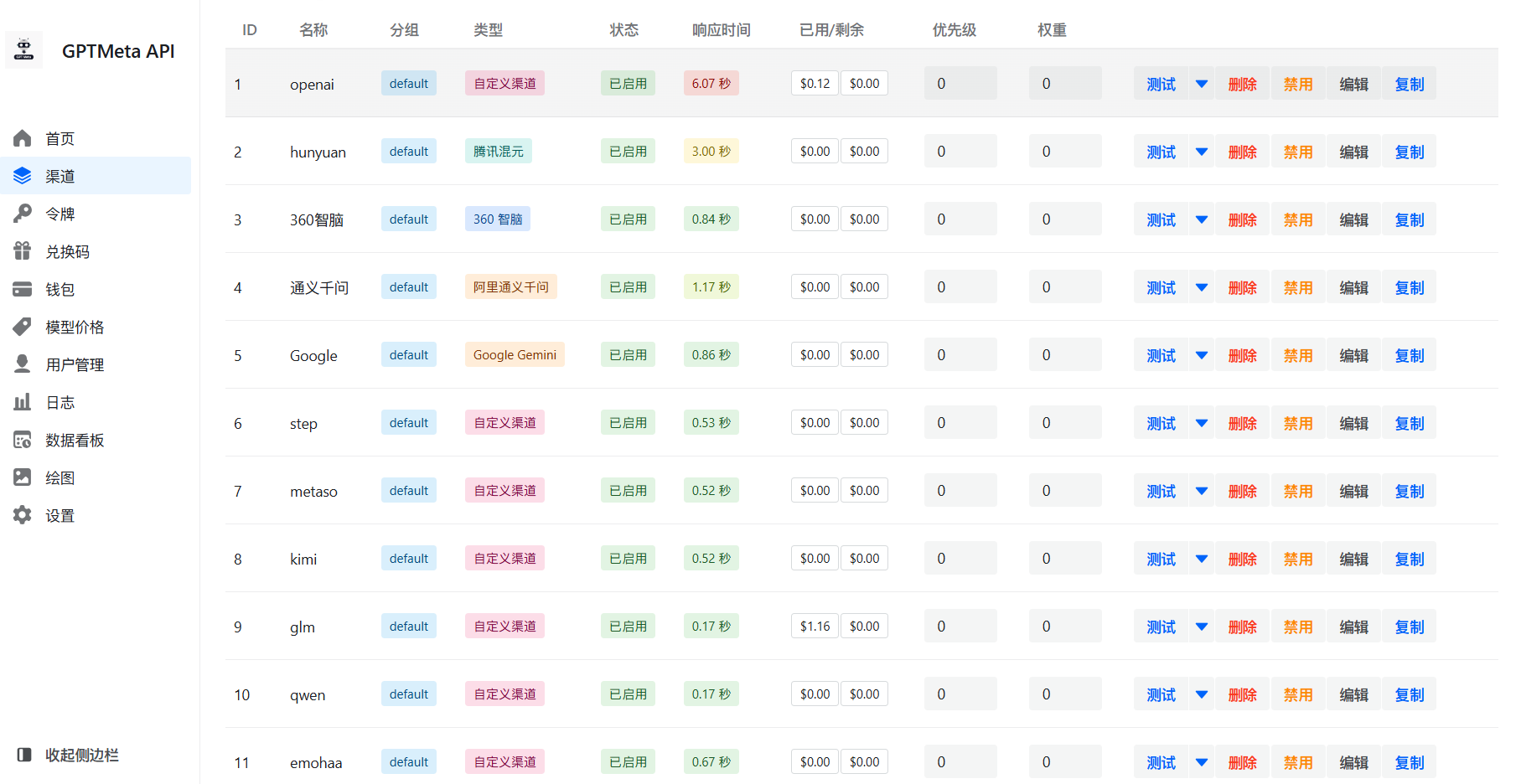

A relay (transit) service built on the official API

In this era of openness and sharing, OpenAI is leading a revolution in artificial intelligence. We are pleased to announce full support for all OpenAI models, including GPT-4-ALL, GPT-4-multimodal, and GPT-4-gizmo-*, as well as a range of domestically developed large models. Most exciting of all, we now also offer access to the more powerful GPT-4o.

Affordable

Easy to use

Privacy and security

Ultra-high concurrency

Low network latency